Root-finding Algorithms and Graphical Interpretations

In many quantitative disciplines, problems can often be framed as the task of finding the value — or set of values — that make some expression equal zero. For example, we may want to find the theoretical break-even point of a firm (when profit is equal to zero) or under what conditions a dynamical system is at equilibrium (when the rate of change of the system is zero).

Formally, we can express this for some real-valued function $f$ as the value(s) $x^\ast\in \mathcal{X^\ast}$ such that $\forall x^\ast\in\mathcal{X}^\ast\subseteq\mathcal{X},f(x^\ast)=0$, where $\mathcal{X}$ is the function domain. The inputs that satisfy this condition are called the roots (or zeros) of $f$, and while finding the roots of an equation can, in some cases, be trivial (e.g. in the case of the linear equation $f(x)=mx+c=0$, $x^\ast=-\frac{c}{m}$), functions of interest tend to be nonlinear in practice, meaning there is no "cookbook" recipe to solve for $\mathcal{X}^\ast$.

The lack of a closed-form solution means we must turn to numerical approximation methods — called root-finding algorithms — to find values tolerably close to a function's real roots via iterative refinement. In this post, I'll briefly explore four well-known root-finding algorithms and showcase their graphical interpretations, before briefly comparing the methods.

To demonstrate each algorithm, we will use the function $f(x)=\sin (x) - 2x^3 + 3x^2 + x + 1$, a nonlinear function with $x^\ast\approx 1.992$ that looks like the following:

func = lambda x : np.sin(x) - 2*(x**3) + 3*(x**2) + x + 1

func_prime = lambda x : np.cos(x) - 6*(x**2) + 6*x + 1

x_vals = np.arange(-3,3,.1)

plt.plot(x_vals, func(x_vals))

plt.title(r'$f(x)=\sin (x) - 2x^3 + 3x^2 + x + 1$')

plt.axhline(0, lw=1, linestyle='dashed', color='black')

plt.xlabel(r'$x$')

plt.ylabel(r'$f(x)$')

plt.show()

Methods¶

Bisection method¶

The bisection method can be thought of as a graphical analog to binary search — the general process is to define a lower and upper bound between which we believe $x^\ast$ lies, and iteratively halve our search space while evaluating our function and checking for over/under-shooting to adjust the lower/upper bound as necessary.

The algorithm for the bisection method is therefore:

- Begin with two guesses $a,b$ such that we believe $x^\ast\in(a,b)$

- Calculate $x_{\text{mid}}=\frac{a+b}{2}$ as the midpoint between $a$ and $b$

- If $f(x_{\text{mid}})<0$, then we know we are too far to the left of $x^\ast$, so update the lower bound $a\gets x_{\text{mid}}$

- If $f(x_{\text{mid}})>0$, then we know we are too far to the right of $x^\ast$, so update the upper bound $b\gets x_{\text{mid}}$

- Repeat steps 2–4 until we either exceed the maximum number of iterations, or changes in $x_{\text{mid}}$ are vanishingly small (convergence)

- Return $x_{\text{mid}}$

In Python, we can write this as:

def bisection(f_func, interval, tol=1e-9, max_iter=1e4, verbose=False, steps=False):

a, b = interval

counter = 0

assert f_func(a) != f_func(b), "Function evaluates to same value at (a) and (b)"

max_iter = int(max_iter)

steps_arr = []

for i in range(max_iter):

mid_point = (a+b)/2

if verbose: print(mid_point)

if abs(b-a) < tol or abs(f_func(mid_point)) < tol:

if steps:

return mid_point, steps_arr

return mid_point # if reached below tolerance

if steps: steps_arr += [mid_point]

# reassigning interval start/end

if np.sign(f_func(mid_point)) == np.sign(f_func(a)):

a = mid_point

else:

b = mid_point

counter += 1

raise ValueError("No convergence")

And an initialization of the bisection method with the lower and upper bounds $a=1.5,b=2.5$ looks like:

To refine our guess for the second iteration, we calculate $x_{\text{mid}}=\frac{1.5+2.5}{2}=2.0$, and we find that $f(2.0)<0$, so we update $b\gets 2.0$ (denoted below as $b^\prime$).

When evaluated, we can see the algorithm attains an approximation for $x^\ast$ in 30 steps:

bisection(func, (1.5, 2.5), verbose=True)

Newton's method¶

Newton's method works by approximating the target function as a straight line at each iterative guess $x_i$, and uses the root of the straight line approximation as the next iterative guess $x_{i+1}$.

A correct observation is that in order to do this, we need to be able to calculate the slope of the function at any point, i.e., we need the first derivative $f'(x)$ of the target function. By linearly approximating the function at each guess, the idea is that the $x$-intercept (root) of each line at $x_i$ will approach $x^\ast$ as iterations progress, since each slope will more accurately "point" to where the true $x$-intercept lies.

We can derive Newton's method by starting with the graphical intuition: with the same target function as above, if we choose $x_0=2.75$ and draw the slope of $f$ at this point, the motivation for Newton's method becomes slightly more clear:

Examining the right-angle triangle formed by $(x_0,f(x_0))$, $(x_0,0)$, and $(x_1,0)$ we can perform some trigonometry to analytically solve for $x_1$:

If we denote $x^\star_0=(x_0,0),x^\star_1=(x_1,0),x^\star_f=(x_0,f(x_0))$, then consider $\angle x^\star_0x^\star_1x^\star_f$, the angle between the tangent line and $x=0$. If we note that the tangent of this angle is equivalent to the slope of the target function at $x_0$, we can analytically obtain $x_1$:

$$\begin{align*} \tan(\angle x^\star_0x^\star_1x^\star_f)&=\frac{f(x_0)}{x_0-x_1}=f'(x_0)\space\text{by definition} \\ \therefore x_1 &= x_0-\frac{f(x_0)}{f'(x_0)} \end{align*}$$which can be generalized to:

$$x_{i+1} = x_i - \frac{f(x_i)}{f'(x_i)}$$So we derive Newton's method as just:

- Obtain the derivative of our function $f'(x)$ and choose an initial guess $x_0$

- Update $x_{i+1}\gets x_i-\frac{f(x_i)}{f'(x_i)}$

- Repeat step 2 until maximum iterations reached or convergence

We can write this in Python as (assuming we have the first derivative):

def newton(a_func_arr, x_0, tol=1e-9, max_iter=1e4, verbose=False, steps=False):

a_func, a_func_d = a_func_arr

max_iter = int(max_iter)

x_prev = x_0

counter = 0

steps_arr = []

for i in range(max_iter):

if verbose: print(x_prev)

f_last = a_func(x_prev)

assert a_func_d(x_prev) != 0, "Derivative evaluates to zero"

fp_last = a_func_d(x_prev) # getting f(x) and f'(x)

x_cur = x_prev - (f_last/fp_last)

if steps: steps_arr += [x_cur]

# checking tolerance

if abs(x_cur - x_prev) < tol or abs(f_last) < tol:

if steps:

return (x_cur, steps_arr)

return x_cur

x_prev = x_cur # reassigning previous x_i

counter += 1

raise ValueError("No convergence")

And in the above example, the second iteration for the above example is the following:

Where we can observe that $x_2$ is clearly closer to $x^\ast$ than $x_1$ or $x_0$.

With an initial guess of $2.75$, Newton's method exploits the curvature of the function closer to its root and approximates $x^\ast$ in just five iterations:

newton((func, func_prime), 2.75, verbose=True)

Secant method¶

A secant in geometry is a line that intersects a curve at two or more points — the secant method iteratively constructs these lines that intersect the target function by beginning with two guesses, $x_0$ and $x_1$. Then, the root of this secant $x_2$ is used to construct the next secant, and so on until convergence.

Again, it's easier to visualize the strategy to derive the equation form:

With $x_0$ and $x_1$, we can construct the secant line $g(x)$ intersecting the two points $(x_0, f(x_0)), (x_1, f(x_1))$ using a linear equation. The gradient of this line is simply $\frac{f(x_1)-f(x_0)}{x_1-x_0}$, and the line is horizontally shifted by $x_0$ and vertically by $f(x_0)$ since we know it passes through the point $(x_0, f(x_0))$. Ultimately, we have:

$$\begin{align*} g(x)=\frac{f(x_1)-f(x_0)}{x_1-x_0}(x-x_0) + f(x_0) \end{align*}$$and the root of this secant line is when $g(x)=0$, which we can rearrange with some algebra to derive an expression for $x$:

$$\begin{align*} g(x)=0&=\frac{f(x_1)-f(x_0)}{x_1-x_0}(x-x_0) + f(x_0) \\ &\iff -\frac{f(x_0)}{(x-x_0)}=\frac{f(x_1)-f(x_0)}{x_1-x_0} \\ &\therefore x=x_0-f(x_0)\frac{x_1-x_0}{f(x_1)-f(x_0)} \end{align*}$$which is generalized to the iterative formula:

$$x_{i+1} = x_{i-1}-f(x_{i-1})\frac{x_i-x_{i-1}}{f(x_i)-f(x_{i-1})}$$Thus, we can define the secant method as the following process:

- Begin with two initial guesses $x_0,x_1$

- Calculate the root of the line between $x_{i},x_{i-1}$ by $x_{i+1} = x_{i-1}-f(x_{i-1})\frac{x_i-x_{i-1}}{f(x_i)-f(x_{i-1})}$

- Repeat step 2 until maximum iterations reached or convergence

And the process can be represented in Python as:

def secant(a_func, x_arr, tol=1e-9, max_iter=1e4, verbose=False, steps=False):

"""This function uses the secant method to approximate a root/zero of a function.

Input: function, initial guesses x0, x1, optional absolute error tolerance, and maximum number of iterations"""

max_iter = int(max_iter)

x_0, x_1 = x_arr

x_pprev = x_0

x_prev = x_1

counter = 0

steps_arr = []

for i in range(max_iter):

if verbose: print(x_prev)

numerator = a_func(x_pprev) * (x_prev - x_pprev)

denominator = a_func(x_prev) - a_func(x_pprev)

assert denominator != 0, "Division by zero encountered in computation in secant method"

x_cur = x_pprev - (numerator/denominator)

if steps: steps_arr += [x_cur]

# checking tolerance

if abs(x_cur - x_prev) < tol or abs(x_cur) < tol:

if steps:

return (x_cur, steps_arr)

return x_cur

x_pprev = x_prev

x_prev = x_cur

counter += 1

raise ValueError("No convergence")

The second iteration of the secant method for our example uses $x_2$ and $x_1$ to derive the new $x_3$:

And with $x_0=1.5,x_1=2.5$, the secant method takes seven steps to converge:

secant(func, (1.5, 2.5), verbose=True)

Steffensen's method¶

Finally, Steffensen's method is similar to Newton's method, but does not require the first derivative: instead, a finite-difference approximation for the gradient is derived:

$$f'(x)\approx \frac{f(x+h)-f(x)}{h}$$where $h=f(x)$ is chosen to yield

$$f'(x)\approx \frac{f(x+f(x))}{f(x)}-1=g(x)$$While this approximation is often successful, it relies on the assumption that the target function $f(x)$ has a slope that approximately satisfies $-1<f'(x^\ast) <0$, along with the approximated slope, $-1<g(x^\ast)<0$. Steffensen's method uses this approximation of the first derivative as a method for correction, so in cases where the assumption doesn't hold, it will often fail to approach $x^\ast$.

When substituting the approximate first derivative $g(x)$ in Newton's method, we yield the iteration step for Steffensen's method:

$$x_{i+1}=x_{i}-\frac{f(x_i)}{g(x_i)}$$Therefore, the process for Steffensen's method is:

- Begin with an initial root guess $x_0$

- Obtain a new root approximation by $x_{i+1}=x_{i}-\frac{f(x_i)}{g(x_i)}$

- Repeat until convergence or maximum iterations reached

Which can be represented in Python as:

def steffensen(a_func, x_0, tol=1e-9, max_iter=1e4, verbose=False, steps=False):

max_iter = int(max_iter)

counter = 0

g_func = lambda x : a_func(x + a_func(x))/a_func(x) - 1

x_prev = x_0

steps_arr = []

for i in range(max_iter):

if verbose: print(x_prev)

x_cur = x_prev - (a_func(x_prev)/g_func(x_prev))

if steps: steps_arr += [x_cur]

# check tolerance

if abs(x_cur - x_prev) < tol or abs(x_cur) < tol:

if steps:

return (x_cur, steps_arr)

return x_cur

x_prev = x_cur

counter += 1

raise ValueError("No convergence")

And when applied to our example function $f(x)$, the first two iterations look like:

And Steffensen's method approximates the root of our target function in 12 steps:

steffensen(func, 2.2, verbose=True)

Comparison¶

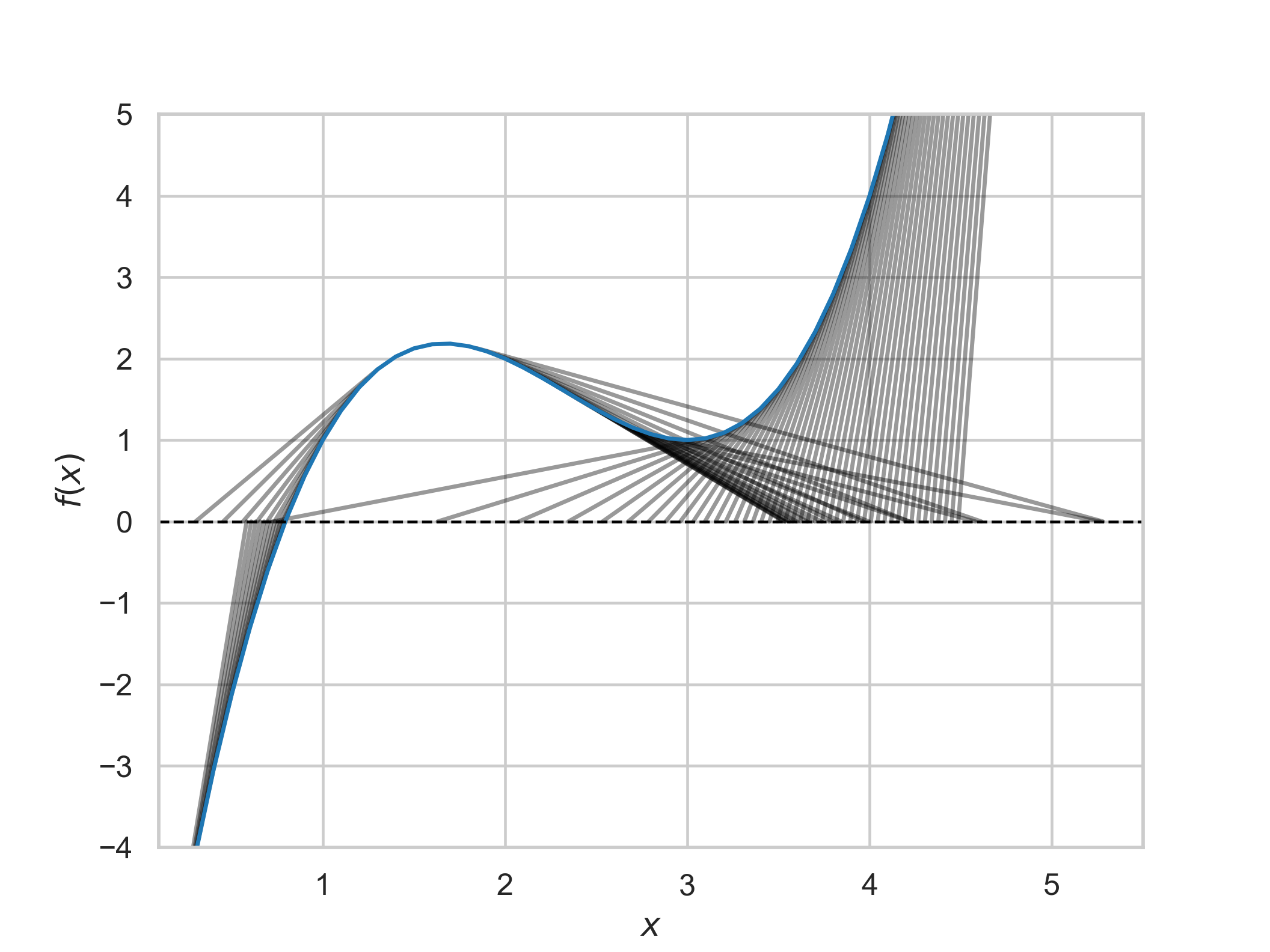

Behavior of convergence varies among the methods: for example, we can compare how each method iteratively refines its estimate $\hat{x}^\ast$ of the true root $x^\ast$ for our function with each iteration:

where the vertical dashed lines represent the iteration number for convergence.

While all methods converge in under 30 steps, this behavior is sensitive to choices of $x_0, x_1$ — for example, widening the initial guess range produces the following:

gen_steps_and_plot(func, func_prime, -10, 1, 10)

To get a wider feel for method behavior, we can look at three additional functions:

$$ \begin{align*} &f_1=\sin(x)-e^{-x}+5x^{3}-x^2;\space\space f_1^\prime=x(15x-2)+e^{-x}+\cos(x) \\ &f_2=x^3 + \frac{1}{1+2x^2} - x;\space\space f_2^\prime = 3x^2 - \frac{4x}{(1+2x^2)^2} - 1 \\ &f_3 = 5x^4 - 4x^2 + 2x - 3; \space\space f_3^\prime = 20x^3 - 8x + 2 \end{align*} $$which are, in Python:

f1 = lambda x : np.sin(x) - np.e**(-x) + 5*(x**3) - x**2

f1d = lambda x : x*(15*x - 2) + np.e**(-x) + np.cos(x)

f2 = lambda x : x**3 + 1/(1+2*(x**2)) - x

f2d = lambda x : 3*(x**2) - (4*x)/((1+2*(x**2))**2) - 1

f3 = lambda x : 5*(x**4) - 4*(x**2) + 2*x - 3

f3d = lambda x : 20*(x**3) - 8*x + 2

And we can plot the functions like so, defining $x_0$ and $x_1$ for each:

For fun, we can create a table to show how each method handles each function in terms of time (in seconds, and number of iterations):

And averaging times over multiple runs:

With distributions for number of iterations to convergence:

An immediately interesting observation is that Steffensen's method is the only method to converge to the negative root of $f_3$ — this is explained by the derivative for $f_3$ being much larger than zero:

f3d(fsolve(f3,1)[0])

meaning the correction method used by Steffensen's method doesn't lead it to the root at $1.0$.

Another quirk is that the recorded times for $f_1$ seem to dominate those of $f_2$ and $f_3$ — this is because $f_1$ is more expensive to compute, so even though the bisection method takes the same number of iterations to converge for $f_1$ and $f_2$, the runtimes for $f_1$ are much higher than those for $f_2$.

This also impacts the performance of Newton's method compared to the secant method for $f_2$ — the average time for the secant method to converge is smaller than that of Newton's method, possibly because computing the first derivative for $f_2$ is expensive (which is not used by the secant method):

# mean of secant method - mean of Newton's method

np.mean([j[2] for j in method_res[1][2]]) - np.mean([j[2] for j in method_res[1][1]])

Conclusion¶

Of course, different root-finding algorithms have trade-offs outside of convergence and complexity — if you're interested, further reading can be found here. Some methods presented here may be inappropriate for certain situations where the function is not differentiable (Newton's method), or where the first derivative cannot be sufficiently approximated (Steffensen's).

Most modern software that automates the root-finding approximation process does so with more complicated methods than those examined in this post — for example, SciPy's fsolve uses what appears to be a modified version of Powell's method, which does not require the target function to be differentiable.

That being said, the above root-finding algorithms are useful for a foundational knowledge of underlying systems for optimization, simulation, and solving systems of equations. Perhaps more importantly, though, they are just plain interesting, and hopefully the visualizations in this post inspired some intuition for how these root-finding algorithms work.